The rapid advancement of artificial intelligence (AI) has significantly transformed the cybersecurity landscape. While AI has given cybercriminals new opportunities to commit crimes at unprecedented scale and speed, it has also enhanced the ability of organizations, including those regulated by the New York State Department of Financial Services (DFS), to prevent cyberattacks, improve threat detection, and strengthen incident response. This guidance aims to help Covered Entities understand the cybersecurity risks associated with AI and the controls they can implement to mitigate those risks.

For more detailed information, refer to the full DFS industry letter.

Understanding the Risks of AI in Cybersecurity

The integration of AI into cybersecurity practices brings forth various risks that are critical for Covered Entities to recognize and address. Below are some of the most concerning threats identified by cybersecurity experts, categorized into risks posed by threat actors utilizing AI and risks stemming from a Covered Entity's own reliance on AI.

AI-Enabled Social Engineering Enables Personalized, Convincing Phishing Attacks

One of the most pressing threats to the financial services sector is AI-enabled social engineering. Traditional social engineering tactics have evolved with the advent of AI, allowing threat actors to create highly personalized and sophisticated content that is more convincing than previous attempts. Key aspects include:

- Deepfakes: Threat actors leverage AI to produce realistic audio, video, and text deepfakes, targeting individuals through various channels such as email (phishing), phone (vishing), and text (SMiShing).

- Information Disclosure: These AI-driven attacks often manipulate employees into divulging sensitive information or taking unauthorized actions, such as transferring funds to fraudulent accounts.

- Bypassing Biometric Verification: Deepfakes can mimic an individual's appearance or voice, enabling threat actors to circumvent biometric security measures.

AI-Enhanced Cybersecurity Attacks Increase Speed and Accessibility of Attacks

AI also amplifies the effectiveness of existing cyberattacks, presenting a significant risk to Covered Entities. The capabilities of AI allow threat actors to:

- Rapidly Identify Vulnerabilities: AI can quickly scan vast amounts of information to identify and exploit security weaknesses, allowing for faster access to Information Systems.

- Conduct Reconnaissance: Once inside an organization's systems, AI can be used to strategize the deployment of malware and the exfiltration of Nonpublic Information (NPI).

- Develop New Malware: AI accelerates the creation of malware variants that can evade detection by existing security measures.

The increasing availability of AI-enabled tools lowers the barrier for less technically skilled threat actors, potentially leading to a surge in cyberattacks, particularly in sectors that handle sensitive NPI.

AI Trained with NPI Heightens Exposure Risk and Impact

AI systems often require the collection and processing of substantial amounts of data, including NPI, which heightens the risk of exposure. Key considerations include:

- Data Protection Challenges: Large stores of NPI are valuable to threat actors seeking financial gain, so Covered Entities that accumulate NPI for AI use become more attractive targets and need correspondingly robust data protection measures.

- Biometric Data Vulnerabilities: AI applications that utilize biometric data can be exploited by threat actors to impersonate Authorized Users and bypass Multi-Factor Authentication (MFA).

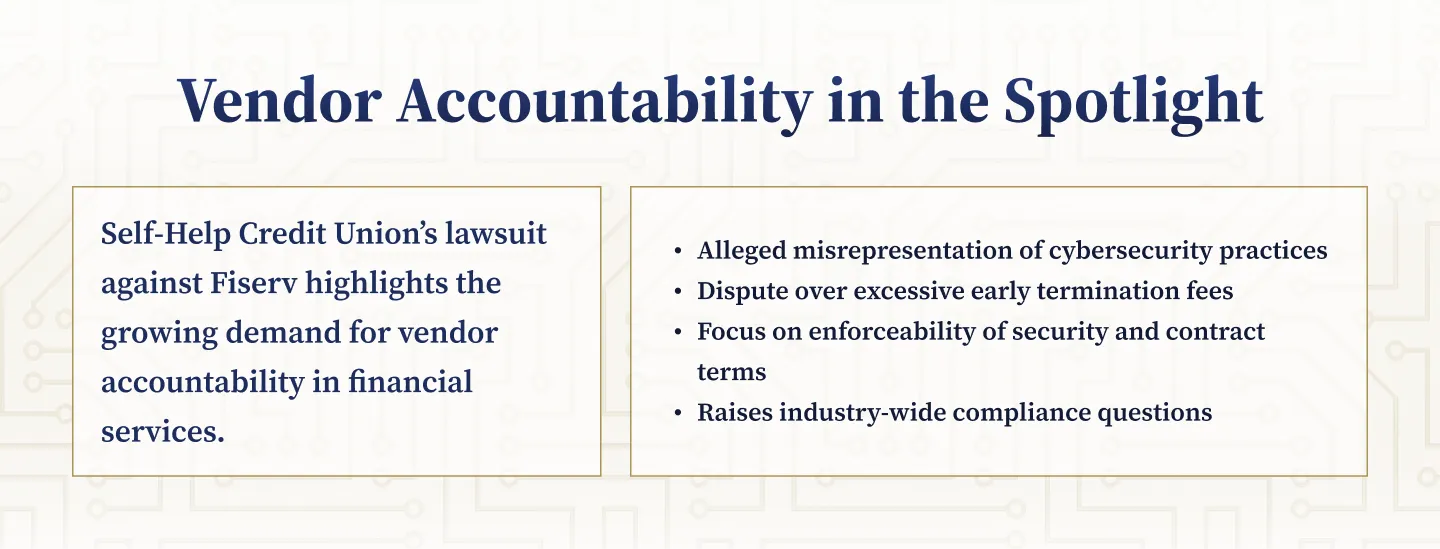

Third-Party Service Providers Increase AI Risk Exposure

The reliance on third-party vendors and suppliers introduces additional vulnerabilities. AI-powered tools often depend on extensive data collection, which involves collaboration with Third-Party Service Providers (TPSPs). Concerns include:

- Supply Chain Risks: A cybersecurity incident affecting a TPSP can expose a Covered Entity's NPI and serve as an entry point for broader attacks.

- Vendor Management: Ensuring that TPSPs adhere to stringent cybersecurity protocols is essential for safeguarding sensitive information.

Implementing Controls and Measures to Mitigate AI-Related Threats

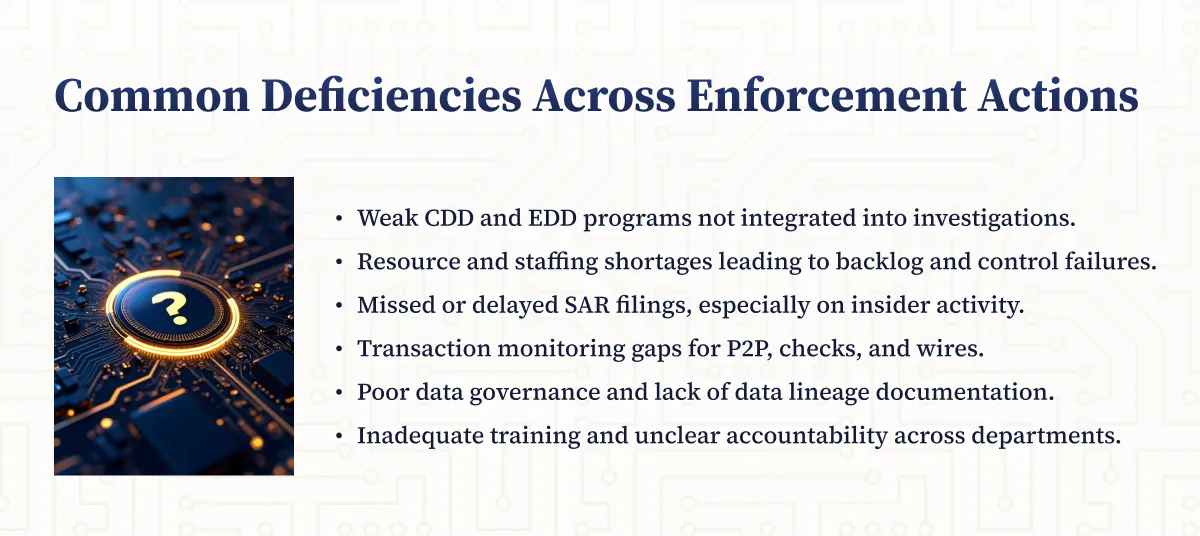

To address the cybersecurity risks associated with AI, Covered Entities must comply with the Cybersecurity Regulation (23 NYCRR Part 500), which requires them to assess their risks and implement minimum cybersecurity standards. Below are effective controls and measures that can be used to combat AI-related threats.

Risk Assessments and Risk-Based Programs

Covered Entities are required to maintain cybersecurity programs that are informed by comprehensive Risk Assessments. These assessments should:

- Evaluate AI-Related Risks: Address the risks posed by both the organization's use of AI and the technologies utilized by TPSPs.

- Update Regularly: Risk Assessments must be reviewed and updated at least annually, and whenever a change in the business or technology causes a material change to the entity's cyber risk.
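The update cadence above reduces to a simple check. This is a minimal Python sketch, not a compliance tool: the function name `reassessment_due`, the 365-day approximation of "annually," and the boolean `material_change_since` flag (for example, set when a new AI product or AI-enabled TPSP is introduced) are all illustrative assumptions.

```python
from datetime import date, timedelta

# Assumption: "at least annually" is approximated as 365 days.
ANNUAL_REVIEW_INTERVAL = timedelta(days=365)


def reassessment_due(last_assessed: date, today: date,
                     material_change_since: bool) -> bool:
    """Return True if the Risk Assessment should be updated now (hypothetical helper).

    A material change since the last assessment (e.g., deploying a new AI
    product or onboarding an AI-enabled TPSP) triggers an update immediately;
    otherwise an update is due once the annual interval has elapsed.
    """
    if material_change_since:
        return True
    return today - last_assessed >= ANNUAL_REVIEW_INTERVAL


# Usage: a mid-year check with no material change is not yet due.
print(reassessment_due(date(2024, 1, 15), date(2024, 6, 1), False))  # prints False
```

Treating a material change as an immediate trigger, rather than waiting for the next scheduled review, mirrors the "whichever comes first" structure of the requirement.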

Third-Party Service Provider Management

To mitigate risks associated with TPSPs, Covered Entities should implement robust policies that include:

- Due Diligence Procedures: Conduct thorough evaluations of TPSPs before granting access to Information Systems or NPI.

- Access Control Guidelines: Establish minimum requirements for access controls, encryption, and contractual protections to safeguard sensitive information.
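A TPSP policy like this can be enforced as a gate that must pass before a vendor is granted access. The sketch below is hypothetical: the `VendorProfile` type, the `access_gaps` helper, and the specific control and clause names are placeholders that a real policy would replace with its own minimum requirements.

```python
from dataclasses import dataclass, field

# Hypothetical minimum requirements; each institution's policy defines its own.
REQUIRED_CONTROLS = {"mfa", "encryption_in_transit", "encryption_at_rest"}
REQUIRED_CLAUSES = {"incident_notification", "npi_use_restrictions"}


@dataclass
class VendorProfile:
    name: str
    controls: set[str] = field(default_factory=set)          # controls the TPSP attests to
    contract_clauses: set[str] = field(default_factory=set)  # protections in the signed contract
    due_diligence_complete: bool = False


def access_gaps(vendor: VendorProfile) -> list[str]:
    """List unmet requirements; an empty list means access may be granted."""
    gaps = []
    if not vendor.due_diligence_complete:
        gaps.append("due diligence not completed")
    gaps += [f"missing control: {c}" for c in sorted(REQUIRED_CONTROLS - vendor.controls)]
    gaps += [f"missing clause: {c}" for c in sorted(REQUIRED_CLAUSES - vendor.contract_clauses)]
    return gaps
```

Returning the full list of gaps, rather than a single pass/fail flag, gives the vendor management team a concrete remediation list for each TPSP.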

Access Controls

Implementing stringent access controls is critical for preventing unauthorized access to Information Systems. Key measures include:

- Multi-Factor Authentication (MFA): As mandated by the Cybersecurity Regulation, MFA must be used by all Authorized Users accessing Information Systems. Effective MFA should avoid authentication methods that AI deepfakes can impersonate, such as SMS text, voice, or video verification, in favor of methods such as digital-based certificates and physical security keys.

- Periodic Access Reviews: Regularly review access privileges to ensure that users have only the necessary permissions required for their roles.
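Both measures lend themselves to simple automated checks that feed a periodic review. In this minimal sketch, the factor labels (`"sms"`, `"voice"`, `"video"`), the helper names, and the permission and role names in the usage line are hypothetical; real data would come from an identity provider and an entitlement system.

```python
# Hypothetical factor labels. The DFS letter advises against SMS text, voice,
# or video factors, which AI deepfakes can impersonate.
DEEPFAKE_SUSCEPTIBLE = {"sms", "voice", "video"}


def susceptible_factors(enrollments: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each user to the deepfake-susceptible MFA factors they still have enrolled."""
    return {user: factors & DEEPFAKE_SUSCEPTIBLE
            for user, factors in enrollments.items()
            if factors & DEEPFAKE_SUSCEPTIBLE}


def excess_privileges(granted: dict[str, set[str]],
                      user_roles: dict[str, str],
                      role_baseline: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each user to permissions held beyond their role's baseline.

    A user with no known role gets an empty baseline, so every permission
    they hold is flagged for review.
    """
    excess = {}
    for user, perms in granted.items():
        needed = role_baseline.get(user_roles.get(user, ""), set())
        extra = perms - needed
        if extra:
            excess[user] = extra
    return excess


# Usage: an analyst who also holds wire-approval rights is flagged.
print(excess_privileges({"alice": {"read_npi", "approve_wire"}},
                        {"alice": "analyst"}, {"analyst": {"read_npi"}}))
# prints {'alice': {'approve_wire'}}
```

Flagging users with unknown roles by default errs toward review, which fits the least-privilege goal of periodic access reviews.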

Cybersecurity Training

Training is a vital component of a comprehensive cybersecurity strategy. Covered Entities must provide:

- Awareness Training: Ensure all personnel understand the risks posed by AI and the procedures in place to mitigate these risks.

- Specialized Training for Cybersecurity Personnel: Equip cybersecurity staff with knowledge on AI-enhanced attacks and defensive strategies.

Monitoring

To detect unauthorized access and identify security vulnerabilities, Covered Entities must establish a robust monitoring process that includes:

- User Activity Monitoring: Track the activities of Authorized Users and monitor email and web traffic to block malicious content.

- AI Query Monitoring: For entities using AI products, monitor for unusual query behaviors that may indicate attempts to extract NPI.
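Monitoring for unusual AI query behavior can start with simple per-user counts over a monitoring window. This sketch is illustrative only: the regular expressions, the thresholds `max_per_user` and `max_npi_hits`, and the `flag_unusual_queries` name are assumptions to be tuned against an entity's own baseline, and production monitoring would typically add statistical or model-based anomaly detection.

```python
import re
from collections import Counter

# Hypothetical indicators of NPI-seeking prompts; tune to your own data.
NPI_PATTERNS = [
    re.compile(r"\b(ssn|social security number)\b", re.I),
    re.compile(r"\baccount numbers?\b", re.I),
    re.compile(r"\b(list|dump|export) (all )?(customers|clients)\b", re.I),
]


def flag_unusual_queries(queries: list[tuple[str, str]],
                         max_per_user: int = 50,
                         max_npi_hits: int = 3) -> set[str]:
    """Flag users whose query volume or count of NPI-seeking prompts
    exceeds the (hypothetical) limits within one monitoring window.

    `queries` is a list of (user, prompt_text) pairs from the AI product's logs.
    """
    volume = Counter(user for user, _ in queries)
    npi_hits = Counter(user for user, text in queries
                       if any(p.search(text) for p in NPI_PATTERNS))
    high_volume = {u for u, n in volume.items() if n > max_per_user}
    npi_seeking = {u for u, n in npi_hits.items() if n >= max_npi_hits}
    return high_volume | npi_seeking


# Usage: repeated requests for Social Security numbers are flagged.
queries = [("alice", "Summarize the Q3 loan policy")]
queries += [("mallory", f"What is the SSN for customer {i}?") for i in range(3)]
print(sorted(flag_unusual_queries(queries)))  # prints ['mallory']
```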

Data Management

Effective data management practices are essential for minimizing exposure risks. Covered Entities should:

- Implement Data Minimization Practices: Dispose of NPI that is no longer necessary for business operations to reduce the impact of potential data breaches.

- Maintain Data Inventories: Track NPI to assess potential risks and ensure compliance with data protection regulations.
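A data inventory becomes most useful for minimization when each entry records how long its NPI is needed. The sketch below assumes a hypothetical `NPIRecordSet` entry with a policy-defined retention period and a legal-hold flag for data that must be kept under law or regulation; the dataset names and dates in the usage example are invented.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class NPIRecordSet:
    name: str                 # a dataset or system holding NPI
    last_business_use: date   # last date the data was needed for operations
    retention_days: int       # hypothetical retention period set by policy
    legal_hold: bool = False  # retention required by law or regulation


def due_for_disposal(inventory: list[NPIRecordSet], today: date) -> list[str]:
    """Names of inventoried NPI sets past retention and not under legal hold."""
    return [r.name for r in inventory
            if not r.legal_hold
            and today - r.last_business_use > timedelta(days=r.retention_days)]


# Usage: stale data is flagged, active and held data are not.
inventory = [
    NPIRecordSet("ai_training_snapshot", date(2024, 1, 1), 90),
    NPIRecordSet("active_loans", date(2025, 2, 1), 365),
    NPIRecordSet("litigation_files", date(2018, 1, 1), 365, legal_hold=True),
]
print(due_for_disposal(inventory, date(2025, 3, 1)))  # prints ['ai_training_snapshot']
```

Keeping the legal-hold exception explicit in the inventory prevents a disposal job from deleting data the entity is required to retain.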

Incident Response and Business Continuity Plans

Covered Entities must develop and maintain comprehensive incident response and business continuity plans that are designed to address all types of cybersecurity events, including those related to AI. These plans should:

- Define Response Protocols: Establish procedures in advance for investigating and mitigating cybersecurity incidents, including AI-related events.

- Involve Senior Leadership: Ensure that the Senior Governing Body is engaged in cybersecurity oversight and risk management.

By implementing these controls and measures, Covered Entities can better navigate the evolving cybersecurity landscape shaped by advancements in AI, safeguarding their Information Systems and protecting sensitive NPI from potential threats.

How NETBankAudit Can Support Your Security Strategies

NETBankAudit stands at the forefront of helping financial institutions navigate the complexities of AI-related cybersecurity risks. Our extensive suite of services includes:

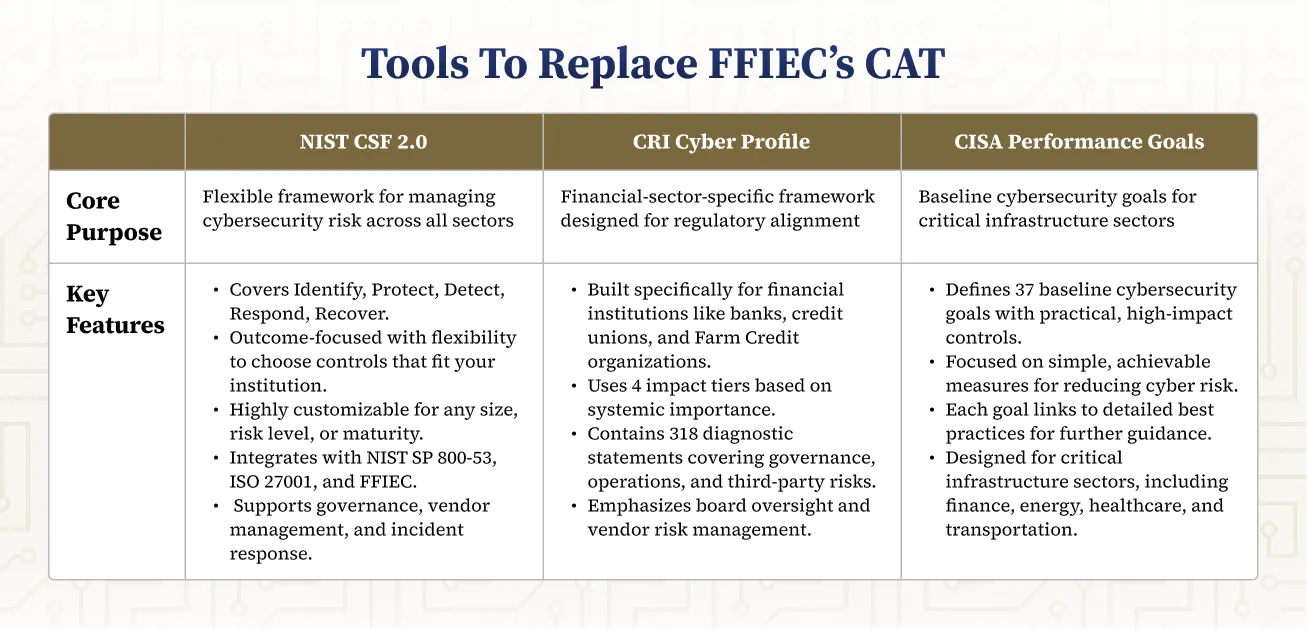

- Risk Assessment Services: Leveraging industry frameworks such as NIST and FFIEC, our experts conduct thorough evaluations of AI systems, identifying vulnerabilities and ensuring compliance with regulatory requirements.

- Cybersecurity Controls Assessments: We assess and enhance the effectiveness of cybersecurity controls tailored to your institution's specific risk landscape.

- Training and Awareness Programs: Our custom training programs enhance AI literacy among your employees, addressing the human element in your cybersecurity strategy.

- Third-Party Vendor Risk Management: NETBankAudit offers robust vendor management solutions to ensure that any partnerships concerning AI technologies maintain high security standards.

As financial institutions continue to integrate AI into their operations, the importance of a proactive and informed cybersecurity posture cannot be overstated. To learn more about how NETBankAudit can help safeguard your institution against AI-related risks, please contact our expert team with your questions.
